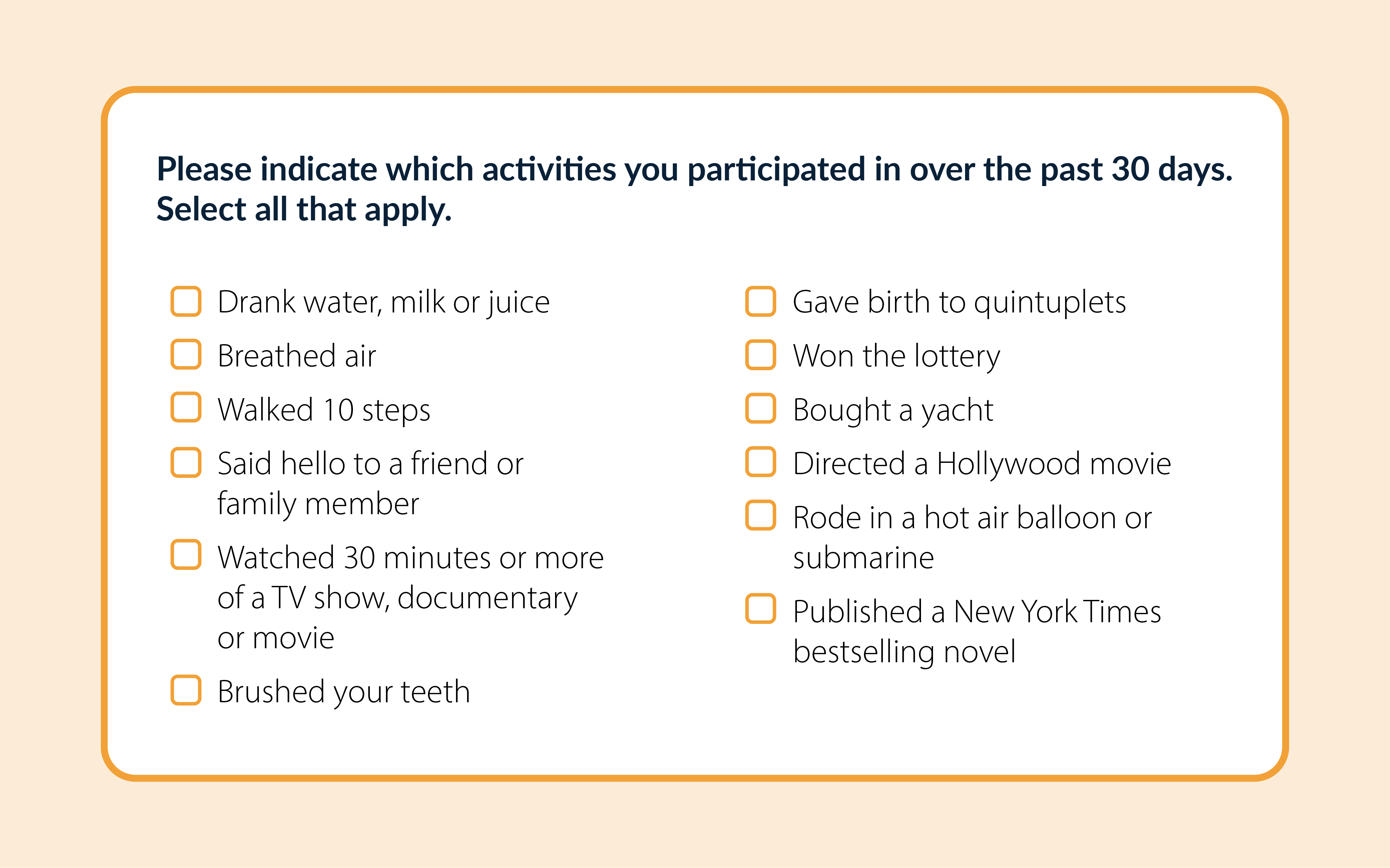

Stanford professor Jon Krosnick's cognitive theory holds that survey respondents take certain mental shortcuts to provide quick, “good enough” answers (satisficing) rather than carefully considered ones (optimizing).¹

Note: To answer a survey question optimally, respondents who “optimize” must work through four stages of cognitive processing:

- Interpret the intended meaning of the question

- Retrieve relevant information from memory

- Integrate the information into a summary judgment

- Map the judgment onto the response options offered