Scientifically identifying the most persuasive messages to encourage vaccination. Significant investments have been made toward the rapid development of a safe and effective vaccine against SARS-CoV-2 (COVID-19). Even though we…

Update: dbt at Civis has gotten even better! Read the latest here

Abstract

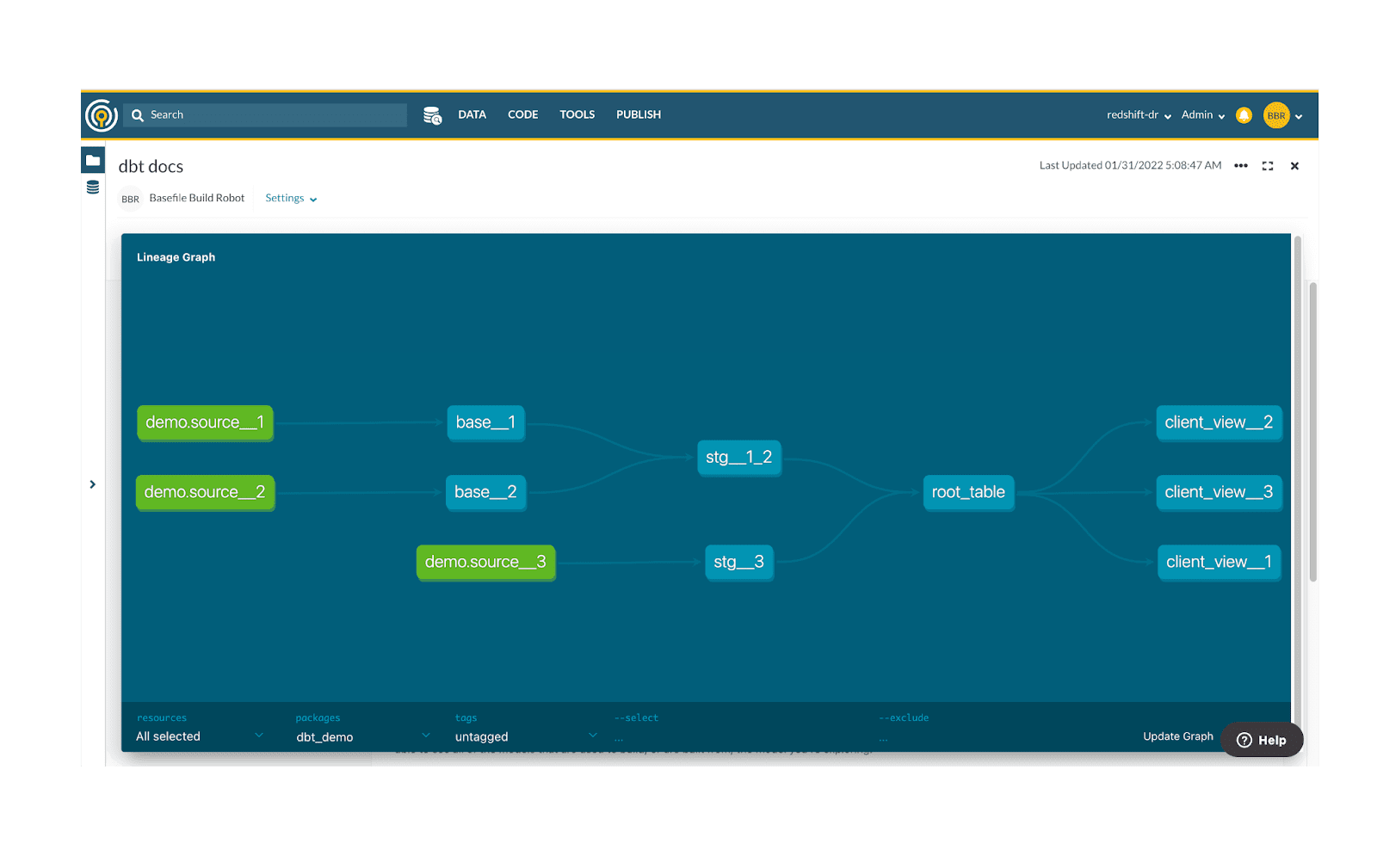

For data practitioners who use dbt — an open source tool that facilitates SQL data transformations — it’s hard to imagine a life without it. Data engineers and data scientists at Civis Analytics feel no different. But there is more to the modern data stack than just dbt. Keep on reading to learn more about how data teams at Civis use Civis Platform in conjunction with dbt to build and maintain stable, reliable, observable, and discoverable data pipelines.

Introduction

With the right database credentials and a few keystrokes in a terminal, it is clear how dbt4 became the preferred multi-tool for orchestrating, testing, and documenting SQL pipelines.

But what happens after breakfast?

After breakfast, you realize that your dbt pipeline has limited utility, since it relies on data sources that go stale over time, and modeled data is only available to consumers within your data warehouse. After lunch, you realize that there are certain data modeling tasks that cannot be responsibly accomplished with SQL (I’m looking at you, machine learning). After happy hour, you realize that your prized dbt artifacts (test results, documentation, etc.) are challenging to share across teams at your company.

These are not challenges we have at Civis, because the Civis Platform offers solutions to all of these problems. After breakfast, data teams at Civis get to have their dbt cake and eat it, too. In no particular order, Platform supports the E (extract), the T (transform), and the L (load) stages of batch data processing, with an emphasis on cross-team collaboration.

Keep on reading to learn more about how we use dbt at Civis, and how Civis Platform supports the end-to-end data pipeline journey.

Data Pipelines and Platform Workflows

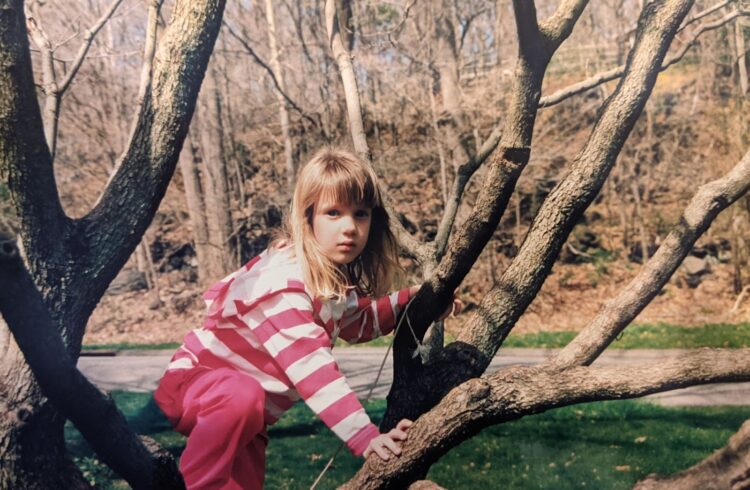

It seems wrong to talk about the end-to-end data pipeline journey without talking about directed acyclic graphs (DAG). Incidentally, I have been building DAGs for decades. Hot summers and hopscotch make good data engineers.

Look at how she so effortlessly applies transitive reduction to those chalk squares!

—Alice, childhood friend, age 7

A lot has changed since my analog days of chalk on pavement and my farcical encounters with seven-year-olds. Now, I am responsible for a different kind of DAG: a data pipeline that refreshes Civis’s core data asset and releases it to Civis Data subscribers. This pipeline is orchestrated by Civis Platform Workflows — a workflow management system that runs containerized jobs in a specified sequence.

“DAG” is common parlance at Civis Analytics, not because we care about the acronym du jour, but because DAGs help us do our job. Moving, transforming, and modeling data requires a strict ordering of events in the same way that advancing across a hopscotch board requires physical coordination. (If your left foot is on 2 and your right foot is on 10, something is probably broken.) Platform Workflows are a boon to data scientists and engineers who need strong guarantees on when and how a set of jobs should run.

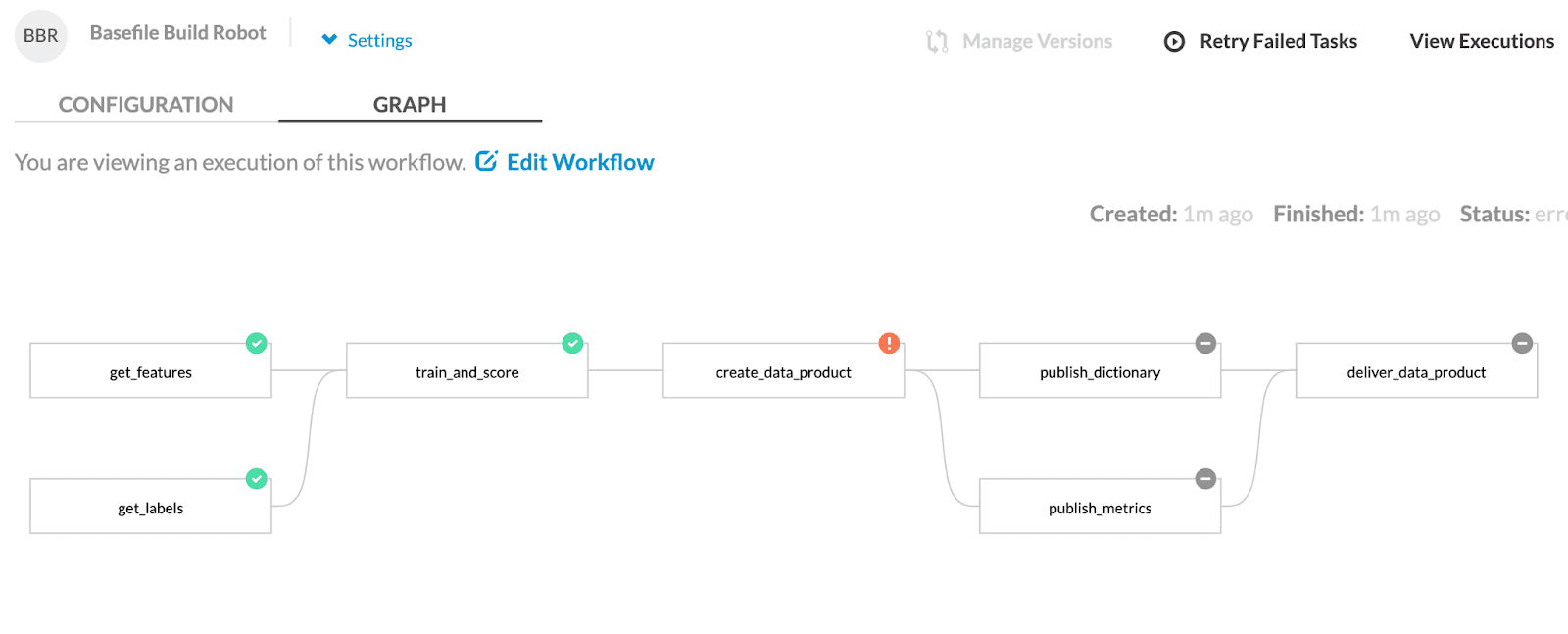

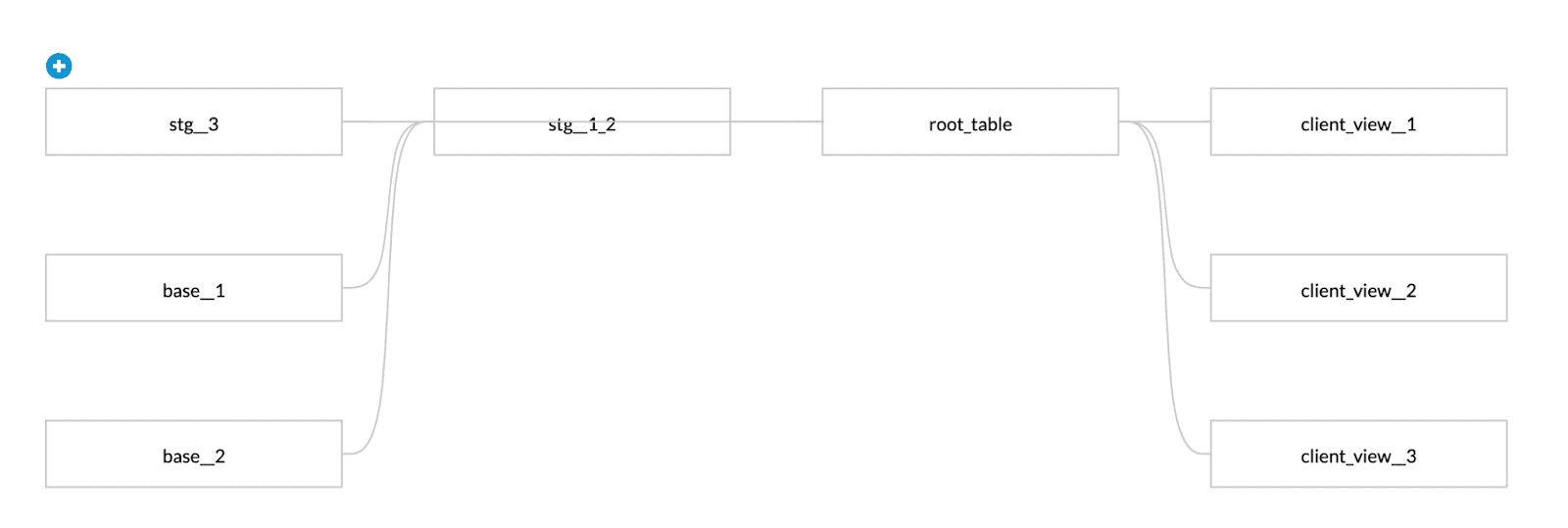

Like many data teams at Civis, Platform Workflows make a lot of sense because we run the same sequence of jobs on a regular basis as source data is updated. For sufficiently complex pipelines, however, doing work is half the battle. (I have never met a data pipeline that doesn’t require a human in the loop.) In a very tangible way, Platform Workflows document the state of a data pipeline such that task dependencies and outcomes can be understood with minimal cognitive overhead. This is accomplished in the Platform UI by means of a DAG — my team’s shared source of truth about key milestones in our pipeline. See for yourself:

In the above example, you can see an ETL pipeline that looks a lot like the one my team maintains. It does things like:

- [PYTHON] Retrieve data from two external sources, and write to Redshift tables: get_features, get_labels

- [R] Train a predictive model on target labels and make predictions using the fitted model: train_and_score

- [SQL] Curate a client-facing dataset with predicted scores: create_data_product

- [PYTHON] Update internal documentation: publish_dictionary, publish_metrics

- [PYTHON] Unload final dataset to S3 and copy to client cluster: deliver_data_product

Platform Workflows and dbt

So where does dbt fit into all of this?

As you may have noticed in the above example, Platform Workflows can run SQL scripts. In theory, Platform Workflows can run an arbitrary number of SQL scripts with an arbitrarily complex dependency network. However, as with most software solutions, can does not imply ought: just because you can draw a hopscotch board spanning an entire city block doesn’t mean you should use it as your primary mode of transportation for getting from point A to point B.

Assuming that your data is warehoused in Redshift and your dbt project is committed to a GitHub repository, dbt can be executed in a Docker container in Civis Platform. Instead of writing SQL DDL and DML commands and arranging them “by hand” in a Platform Workflow, we execute dbt run in a Platform Container Script, as shown here:

It really is that simple!

Here is a glimpse of what life was like before we migrated our SQL pipelines to dbt. Behold: eight CREATE TABLE or CREATE VIEW statements that are manually stitched together through a Platform Workflow. To design this workflow, table dependencies need to be known apriori by inspecting raw SQL. To make pipeline runs idempotent, each CREATE TABLE statement is preceded by a DROP IF EXISTS statement.

As much as we love Platform Workflows, here is an example of how not to use them:

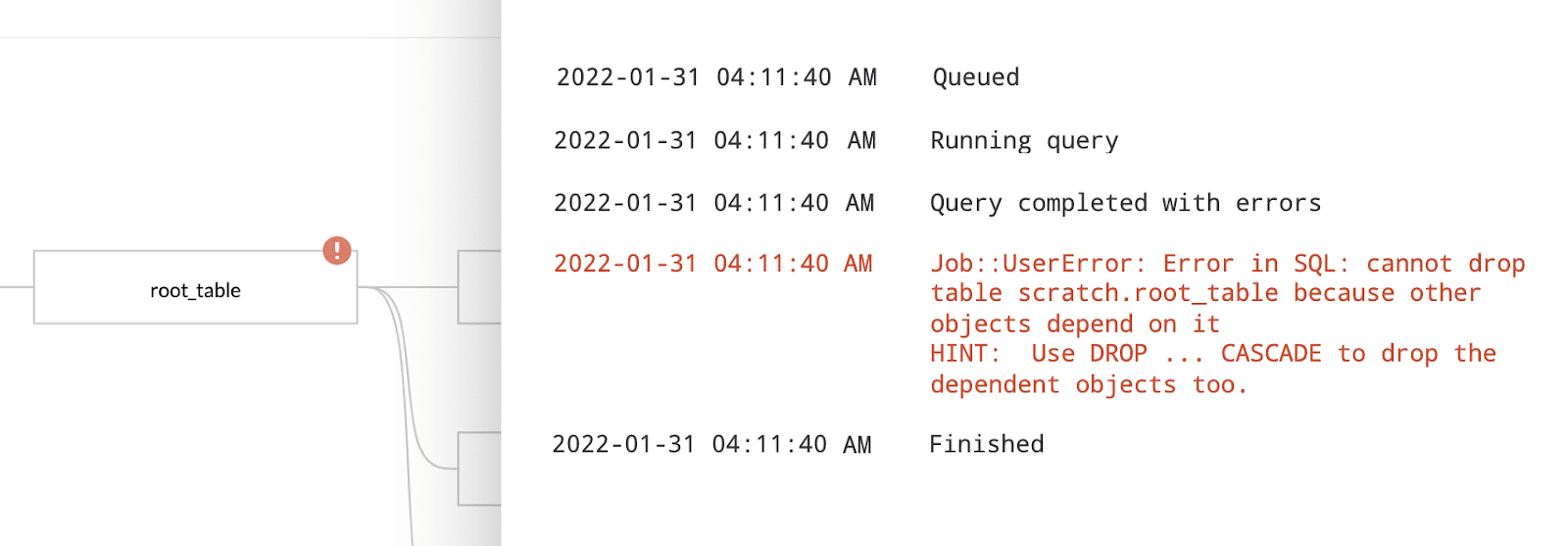

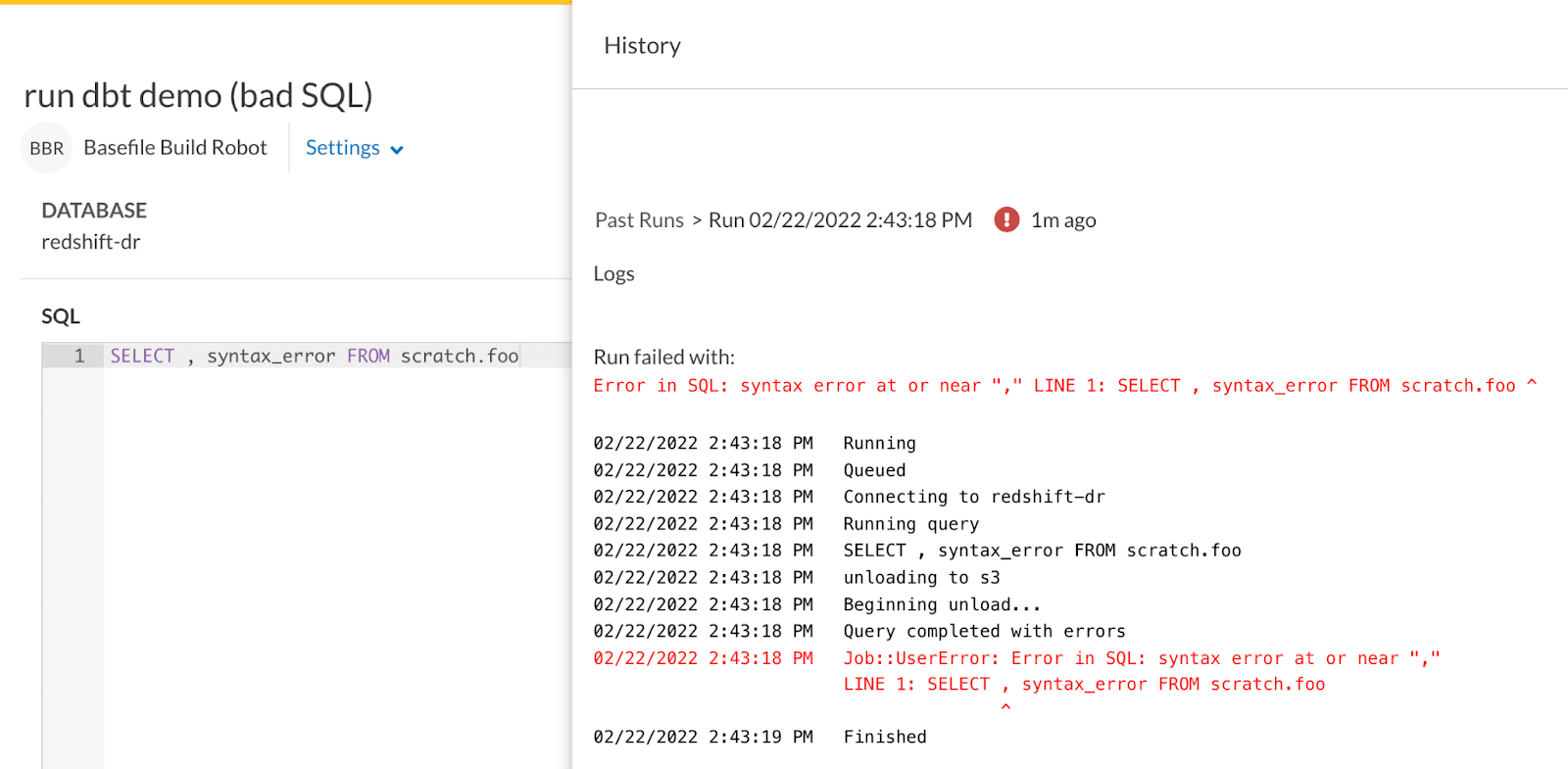

Managing Workflow side effects (usually database objects) was a non-trivial task, and errors like the one below were common:

The testing and documentation of this data pipeline were handled separately using bespoke Python packages that were often in a state of disrepair or neglect due to high maintenance burdens and poor usability features. Migrating to dbt was the obvious choice, as it solved many of these problems out of the box.

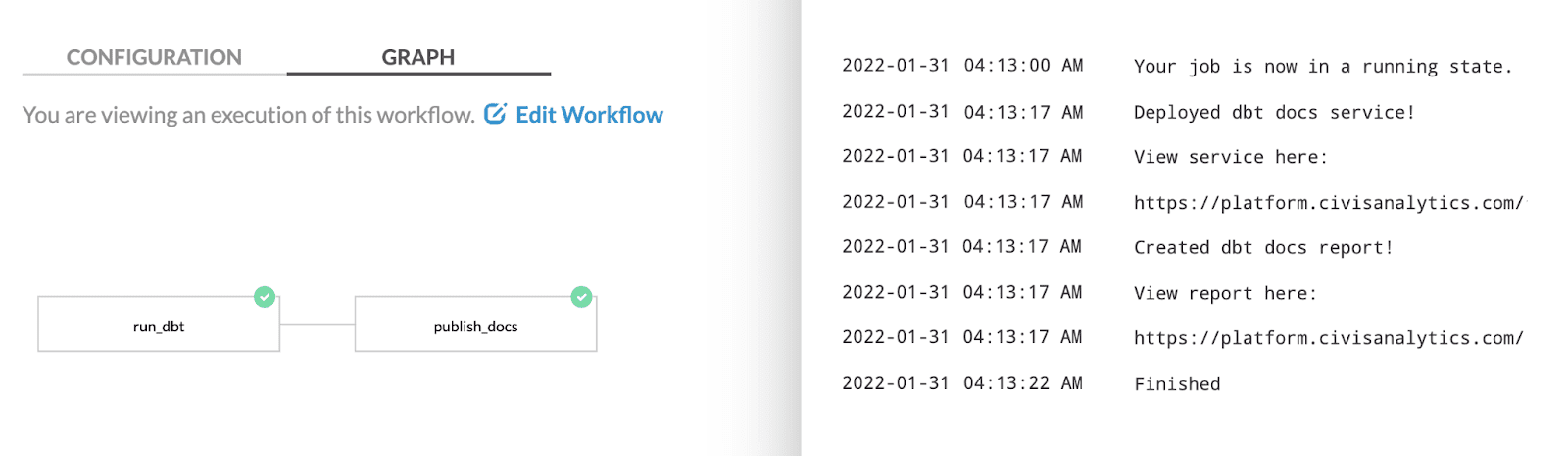

In contrast to the above Platform Workflow, here is what the refactored pipeline looks like after migrating to dbt:

We have one task (run_dbt) for transforming and testing data by executing dbt run and dbt test in a Docker container. We have another task (publish_docs) which deploys a Platform Service for sharing the auto-generated dbt docs.

Notice that at every stage of this pipeline — from creating, to testing, to documenting — a developer only needs to oversee the “what.” (What has been created? What should I test? What will be documented?) We leave the “how” up to dbt. Incidentally, dbt is much better at the “how” than we ever were, and our pipeline stability and maintainability has improved as a result.

The only shortcoming with our new Platform Workflow design is that failed dbt runs can be more challenging to debug. When a Platform SQL script fails (such as in our old Workflow), a developer can inspect the raw SQL directly in the UI, which can speed up debugging. However, when a dbt run fails, a developer gets a helpful error message, but the compiled SQL is not immediately accessible.

My team’s workaround was to write a small Python script that parses the dbt target manifest.json and run_results.json files, in the event of an error, and posts the compiled SQL to a new Platform SQL script. This allows developers to debug SQL generated by dbt directly in a web browser, as shown below.

This is one of many examples of the extensibility of Civis Platform to facilitate data pipeline development.

Conclusion

As I’ve demonstrated, Civis Platform is a general purpose software solution for data teams who need a centralized, secure, reliable, and friendly interface for moving and modeling data. Platform Workflows, specifically, are useful for orchestrating batch ETL (or ELT) tasks and offer a tangible layer of abstraction for developers to understand and interact with their pipeline architecture. Platform Workflows are not perfect, but where Workflows fall short, complementary tooling such as dbt can be easily integrated so that data teams can leverage the full modern data stack.

Footnotes

- Coalesce 2021: Nik Bladey, Human in the loop data processing.

- Coalesce 2022: Emma Peterson, So You Think You Can DAG: Supporting data scientists with dbt packages.

- Coalesce 2022: Evelyn Stamey, Inclusive Design and dbt.

- Stylized in lowercase per dbt Labs’ request