For our customers, some of their richest information lives in unstructured text: open-ended survey responses, donor notes, grant abstracts, resumes, support tickets, customer feedback, employee comments, etc.

Organizations collect this every day, yet it is rarely used because it is too hard to work with at scale. One-off scripts break. Keyword tagging is insufficient. Results show up late, if at all. The hurdle is not collection. It is turning text into trustworthy, analysis-ready data on a regular cadence.

Today I’m excited to announce the release of Taxonomer, a new Civis tool for data teams that turns unstructured text into analysis‑ready data at scale.

LLMs understand text well, but our early pilots hit two roadblocks. Outputs stayed siloed from the analytics stack, and people did not trust the model because there was no simple way to see what it was doing or improve it.

Taxonomer closes both gaps. You define the categories that matter, iterate in a tight human-in-the-loop workflow, and write results straight to your database so the rest of your analytics stack can use them.

How it works

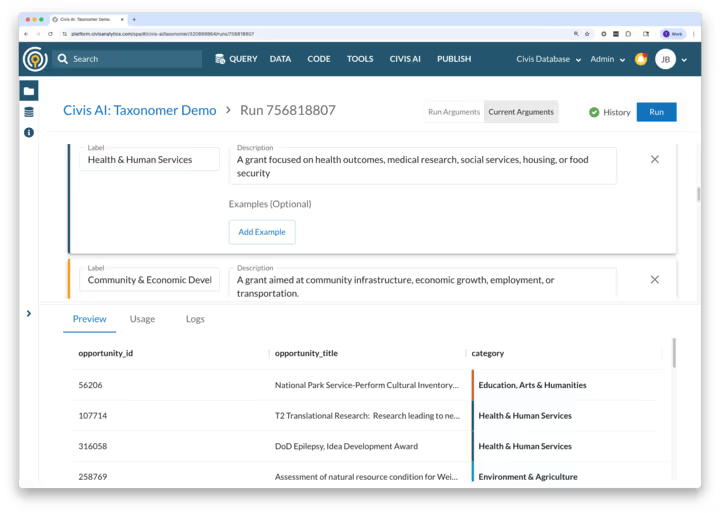

Taxonomer categorizes text stored in your Civis database into labels you choose. You point it at a table and a text column, define your categories, preview results on a sample, refine as needed, then process the full dataset to a new table for downstream reporting and modeling. It supports multiple leading models, including Meta Llama 4 and Anthropic Claude, and can be automated in Civis Workflows or called through the API.

Human-in-the-loop, by design

Good text classification requires context. In Taxonomer, you provide it in three ways:

- Add a brief “text description” so the model knows what kind of language to expect.

- Create clear category labels and one‑sentence descriptions.

- Optionally add a few examples to teach edge cases.
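To make those three pieces concrete, here is a minimal sketch of how a categorization schema could be written down as data. Taxonomer itself is configured in the Civis Platform UI; the `Category` and `Schema` classes, the `render_instructions` helper, and the sample survey categories below are all hypothetical illustrations, not Taxonomer's actual format or API.

```python
from dataclasses import dataclass, field


@dataclass
class Category:
    """One label, its one-sentence description, and optional examples."""
    label: str
    description: str
    examples: list[str] = field(default_factory=list)


@dataclass
class Schema:
    """A text description plus the categories to sort text into."""
    text_description: str
    categories: list[Category]


def render_instructions(schema: Schema) -> str:
    """Flatten the schema into plain-text instructions (hypothetical layout)."""
    lines = [f"Text being classified: {schema.text_description}", "Categories:"]
    for cat in schema.categories:
        lines.append(f"- {cat.label}: {cat.description}")
        for ex in cat.examples:
            lines.append(f'  example: "{ex}"')
    return "\n".join(lines)


# Made-up schema for open-ended volunteer survey responses.
schema = Schema(
    text_description="Open-ended survey responses about a volunteer program",
    categories=[
        Category("Scheduling", "Comments about shift times or availability."),
        Category(
            "Training",
            "Comments about onboarding or training quality.",
            examples=["The orientation video was out of date."],
        ),
    ],
)

print(render_instructions(schema))
```

Writing the schema down this way makes each round of iteration small: you change one description or add one example, then preview again.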

Start by previewing results on a sample of rows. If something looks off, tweak a definition or add an example, then re‑run. In our user testing, this was the most important step: users need to trust the results, and this “preview and adjust” loop quickly shows them how the model interprets their data.

Once the sample looks right, run the full job and write the results to a table. We also recommend human review for high‑stakes use cases. This is how you get reliable quality without weeks of manual coding.
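One simple way to decide whether a sample "looks right" is to hand-label a few rows and compare them with the model's labels. The sketch below assumes you have exported both sets of labels as Python lists. The `agreement` and `confusions` helpers and the sample labels are hypothetical, and this is not necessarily how Taxonomer or the case study below measures accuracy.

```python
from collections import Counter


def agreement(model_labels, human_labels):
    """Share of rows where the model label matches the human label."""
    if len(model_labels) != len(human_labels):
        raise ValueError("label lists must be the same length")
    if not human_labels:
        raise ValueError("need at least one labeled row")
    matches = sum(m == h for m, h in zip(model_labels, human_labels))
    return matches / len(human_labels)


def confusions(model_labels, human_labels):
    """Count (human label, model label) pairs where the two disagree."""
    return Counter(
        (h, m) for m, h in zip(model_labels, human_labels) if m != h
    )


# Made-up labels for a five-row preview sample.
human = ["Scheduling", "Training", "Scheduling", "Other", "Training"]
model = ["Scheduling", "Training", "Other", "Other", "Scheduling"]

print(f"agreement: {agreement(model, human):.0%}")
for (human_label, model_label), n in confusions(model, human).items():
    print(f"human said {human_label}, model said {model_label}: {n}")
```

The disagreement pairs show which category definitions to tighten, or where an extra example would help, before you run the full job.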

Embedded in your workflows

Taxonomer lives where your data already lives. Inputs come from your Civis‑managed Redshift or your connected warehouse. Outputs are clean tables you can join to facts and dimensions, feed into dashboards, trigger follow‑ups, or use in modeling pipelines. You can schedule jobs in Civis Workflows and manage them like any other script.

What makes this useful

- Fully customize the categorization schema. Labels mirror how your team talks about programs, donors, customers, or staff.

- Iterate fast. The preview loop makes it easy to catch mistakes and teach the model with examples.

- Get analysis‑ready tables. Results land in your warehouse with IDs so you can join back to source rows and keep a clean audit trail.

- Automate. Run nightly, attach to new survey waves, or kick off after an import finishes, all in Workflows or via the API.
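As an illustration of joining results back to source rows, here is a small sketch that uses an in-memory SQLite database as a stand-in for your warehouse. The table names (`survey_responses`, `survey_labels`), column names, and rows are assumptions made up for the example; the actual output table layout is whatever your Taxonomer job writes.

```python
import sqlite3

# In-memory SQLite stands in for Redshift or a connected warehouse.
conn = sqlite3.connect(":memory:")
conn.executescript(
    """
    CREATE TABLE survey_responses (
        response_id INTEGER PRIMARY KEY,
        response_text TEXT
    );
    -- Hypothetical output table: one label per source row, keyed by ID.
    CREATE TABLE survey_labels (
        response_id INTEGER,
        category TEXT
    );
    INSERT INTO survey_responses VALUES
        (1, 'Shifts start too early'),
        (2, 'Orientation was confusing'),
        (3, 'Evening shifts would help');
    INSERT INTO survey_labels VALUES
        (1, 'Scheduling'),
        (2, 'Training'),
        (3, 'Scheduling');
    """
)

# Join labels back to the source rows by ID and count rows per category.
rows = conn.execute(
    """
    SELECT l.category, COUNT(*) AS n
    FROM survey_responses AS r
    JOIN survey_labels AS l ON l.response_id = r.response_id
    GROUP BY l.category
    ORDER BY n DESC, l.category
    """
).fetchall()

for category, n in rows:
    print(f"{category}: {n}")
```

Because every label carries the source row's ID, the same join works for dashboards, follow-up triggers, or model features.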

Case study: Classification at community scale

Climate Advocacy Lab needed to categorize more than thirteen thousand digital ads to compare performance across organizations. With Civis, manual coding that took months was completed in hours. Accuracy jumped from a 70 percent first pass to 88 percent with light tuning, and most importantly, they were able to learn the specific details of what made content most compelling for their audience.

Get started today

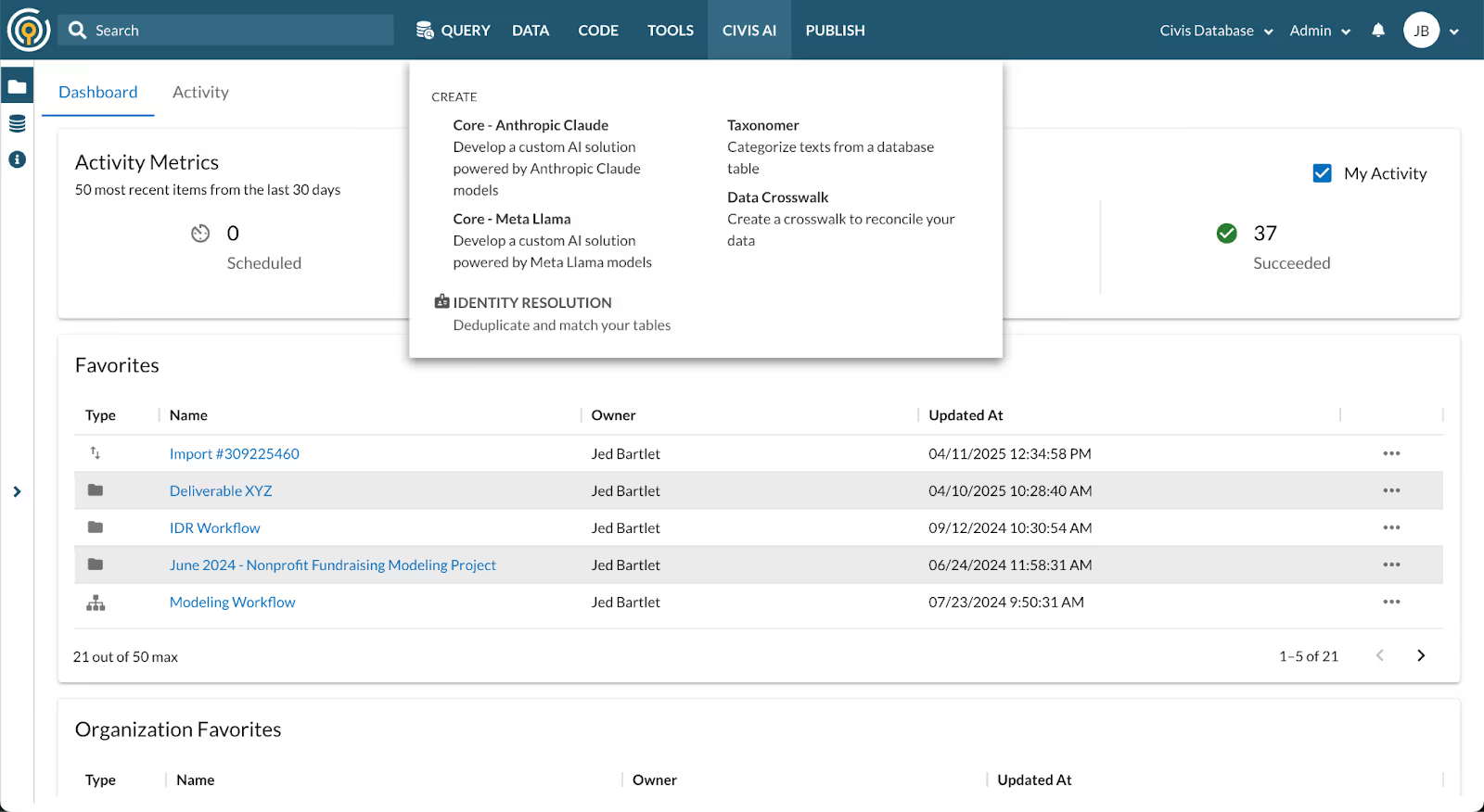

All Civis customers have access to Taxonomer today. Try it out by going to "Taxonomer" in the "Civis AI" menu in Civis Platform. Want a deeper walkthrough or help choosing models and cost controls? Read the Taxonomer support guide, then reach out to your Civis team to set up a working session.

PS: If you are new to AI features in Civis, check out SQL AI Assist for another example of how we think about human‑guided AI inside the platform.
